Demos (May 9, 10)

The following ten institutions will demonstrate their products and technologies.

- Place: Atrium (Toyoda Auditorium 1F)

- Date:

  - May 9: 14:40–16:10, with Poster session 2
  - May 10: 16:30–18:00, with Poster session 3

Institutions

- Image Processing on Mobile Phone Cameras (Huawei Technologies Japan K. K.)

- Applications of Computer Vision and Machine Learning at DWANGO (DWANGO Co., Ltd.)

- Video analytics utilizing big data in stores on ABEJA platform (ABEJA, Inc.)

- Real-time Computer Vision for Driver Assistive Systems (Cambridge Research Laboratory, Toshiba Research Europe Ltd)

- Ibeo Scalable Multi Sensor Localization approach for Highly Automated Driving (Ibeo Automotive Systems GmbH)

- Real-time Multi-spectral Material Detection Camera System (Gifu University and ViewPLUS Inc.)

- Morpho Deep Learning System: Demonstration of Deep Learning for Visual Inspection (Morpho, Inc.)

- Super Wide-Angle 3D Laser Range Sensor (Fujitsu Laboratories LTD.)

- Pedestrian Tracking Using Multiple RGB-D Cameras (The National Institute of Advanced Industrial Science and Technology, AIST)

- Book exhibit by Springer

Summary

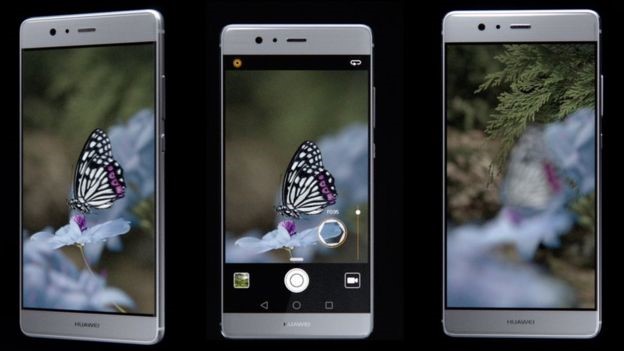

Image Processing on Mobile Phone Cameras

Huawei Technologies Japan K. K.

As mobile phones have become ubiquitous, photography with their built-in cameras has become commonplace. Huawei Technologies, a leading mobile phone manufacturer, is developing technologies that bring professional photographic techniques to consumers. We will show features for professional-level photography as well as new forms of entertainment on mobile phones.

Dual cameras

The Huawei P9 has dual cameras: one captures color images and the other captures grayscale images.

Shallow depth of field

Using the two cameras, the phone can simulate the shallow depth of field of a large-aperture lens. The user can select which foreground object to bring into focus.
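A common way to simulate shallow depth of field from a depth estimate is to blur each pixel by an amount that grows with its distance from the chosen focal depth. The sketch below illustrates the idea on a single row of pixels. The per-pixel depth input, the linear blur model, the `strength` and `max_radius` parameters and the box filter are all assumptions for illustration; this is not Huawei's implementation.

```python
def synthetic_bokeh_row(intensities, depths, focus_depth, strength=2.0, max_radius=3):
    """Blur a 1-D row of pixels according to depth (illustrative sketch only).

    Assumed model: pixels at focus_depth stay sharp, and the box-blur radius
    grows linearly with |depth - focus_depth|, clamped to max_radius.
    """
    n = len(intensities)
    out = []
    for i in range(n):
        radius = min(max_radius, int(round(strength * abs(depths[i] - focus_depth))))
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        window = intensities[lo:hi]
        out.append(sum(window) / len(window))  # box-filter average
    return out


if __name__ == "__main__":
    row = [0, 0, 100, 0, 0, 100, 0, 0]
    depth = [1.0, 1.0, 1.0, 1.0, 3.0, 3.0, 3.0, 3.0]  # left half near, right half far
    print(synthetic_bokeh_row(row, depth, focus_depth=1.0))
```

Focusing on the near half leaves those pixels untouched while the far half is smoothed. A real pipeline would also avoid letting in-focus foreground pixels bleed into the blurred background, which this simple box filter does not handle.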

3D face modeling

The Huawei Honor V9 can create an avatar from facial photos. From multiple shots of a face, the user can produce a figure with a 3D printer or an avatar to share through social applications for entertainment.


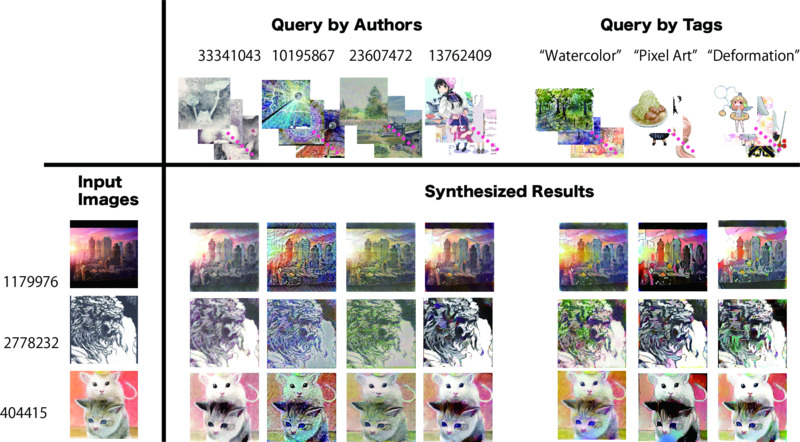

Applications of Computer Vision and Machine Learning at DWANGO

DWANGO Co., Ltd.

DWANGO is a media company that runs UGC (user-generated content) services, including videos, live streams and illustrations. Our research division, Dwango Media Village, focuses on computer graphics, computer vision and natural language processing to help creators improve the quality of their work efficiently.

Our comic colorization system is designed for Japanese manga and can colorize manga using only a few reference color images [Fig. 1]. Our style transfer system can handle multiple reference images and coherently transfer target styles (e.g., pixel art, watercolor) to videos or illustrations [Fig. 2].
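Many neural style transfer methods represent an image's style by the Gram matrix of its convolutional feature maps (the correlations between feature channels) and measure style mismatch as the squared difference between Gram matrices. The sketch below computes both for tiny hand-made feature maps. It illustrates this widely used formulation only and is not a description of DWANGO's system; averaging the Gram matrices of several references is one simple, assumed way to combine multiple style images.

```python
from typing import List

Matrix = List[List[float]]  # features: channels x flattened spatial positions


def gram_matrix(features: Matrix) -> Matrix:
    """G[i][j] = mean over positions of F_i * F_j (channel correlations)."""
    n_pos = len(features[0])
    return [
        [sum(a * b for a, b in zip(fi, fj)) / n_pos for fj in features]
        for fi in features
    ]


def mean_gram(grams: List[Matrix]) -> Matrix:
    """Average several Gram matrices, one per reference image (an assumed combination rule)."""
    k, c = len(grams), len(grams[0])
    return [[sum(g[i][j] for g in grams) / k for j in range(c)] for i in range(c)]


def style_loss(gram_a: Matrix, gram_b: Matrix) -> float:
    """Sum of squared element-wise differences between two Gram matrices."""
    return sum(
        (x - y) ** 2
        for row_a, row_b in zip(gram_a, gram_b)
        for x, y in zip(row_a, row_b)
    )


if __name__ == "__main__":
    g1 = gram_matrix([[1.0, 0.0], [0.0, 1.0]])
    g2 = gram_matrix([[1.0, 1.0], [1.0, 1.0]])
    print(style_loss(g1, g2), mean_gram([g1, g2]))
```

In an actual system the feature maps would come from a pretrained network, and the output image would be optimized (or a feed-forward network trained) to reduce this loss.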

We also introduce our computer graphics research on 3D animation and our illustration dataset, Nico-Illust.


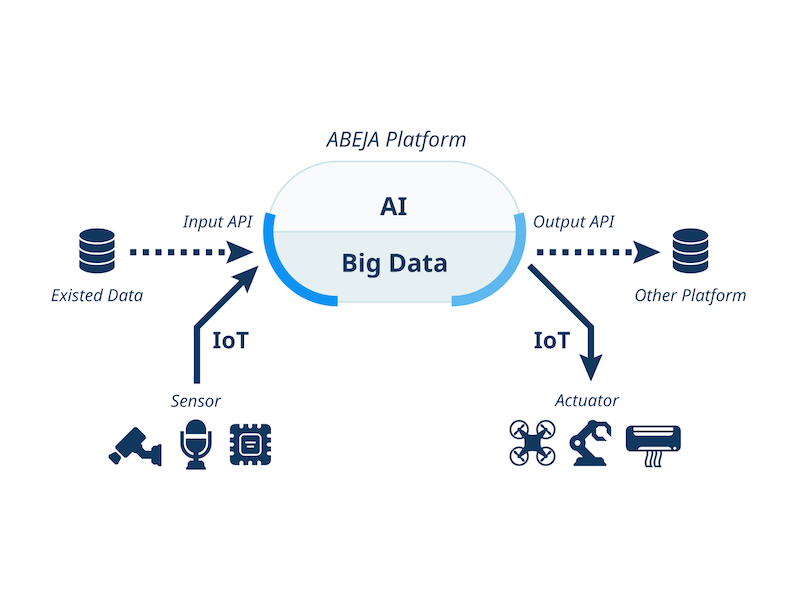

Video analytics utilizing big data in stores on ABEJA platform

ABEJA, Inc.

ABEJA Platform brings together next-generation digital technologies on a single platform, where real-world data is collected and analyzed on cloud servers. Data such as video and POS/CRM records is acquired simultaneously from retail stores and processed to provide various analytics, including customer behavior patterns and heat maps of a store’s hot spots. These analytics not only help store managers understand customer behavior but also provide valuable insights for improving store performance through optimized operations or better stock management. Moreover, ABEJA Platform efficiently processes video data on the order of several petabytes from retail stores. In this demonstration, we present people detection and crowd counting from retail store images using deep learning.
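A widely used formulation of crowd counting trains a network to predict a density map whose sum over the image equals the number of people. The sketch below shows only the training-target construction and the counting step: each annotated head position is spread into a normalized Gaussian, so summing the map recovers the count. The grid size, `sigma` and function names are illustrative assumptions; ABEJA's actual models are not described here.

```python
import math


def density_map(points, height, width, sigma=1.0):
    """Place a 2-D Gaussian, normalized to sum to 1, at each (row, col) head annotation."""
    dmap = [[0.0] * width for _ in range(height)]
    for pr, pc in points:
        kernel = [
            [math.exp(-((r - pr) ** 2 + (c - pc) ** 2) / (2 * sigma ** 2)) for c in range(width)]
            for r in range(height)
        ]
        total = sum(sum(row) for row in kernel)  # normalize within the image bounds
        for r in range(height):
            for c in range(width):
                dmap[r][c] += kernel[r][c] / total
    return dmap


def count_people(dmap):
    """The estimated count is the integral (sum) of the density map."""
    return sum(sum(row) for row in dmap)


if __name__ == "__main__":
    heads = [(2, 3), (5, 7), (6, 1)]
    dmap = density_map(heads, height=8, width=10, sigma=1.5)
    print(round(count_people(dmap), 6))  # 3.0
```

At inference time a network would predict `dmap` directly from the image, and `count_people` would be applied to its output; density maps handle overlapping people in crowds more gracefully than counting individual detections.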


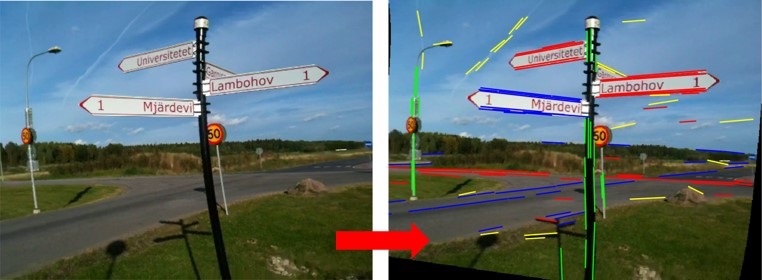

Real-time Computer Vision for Driver Assistive Systems

Cambridge Research Laboratory, Toshiba Research Europe Ltd

At the Cambridge Research Laboratory of Toshiba Research Europe Limited, we conduct core research to advance scientific understanding and build the foundation of Toshiba’s future technologies. We are especially interested in real-time computer vision methods, as our target applications are in driver assistance and social infrastructure. In our presentation, we will demonstrate our latest advances in:

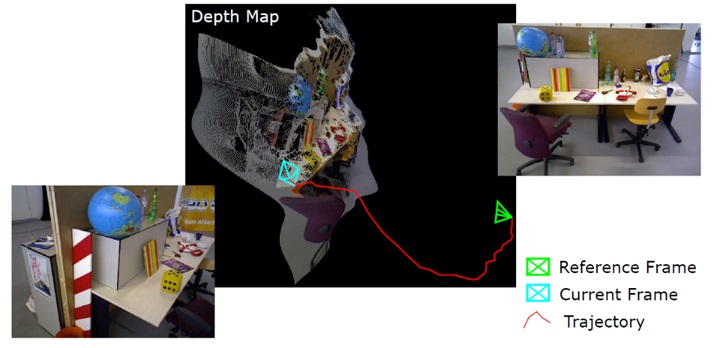

- Pose-graph optimization: Pose-graph optimization is fundamental to SLAM. We formulate it as a variational Bayesian problem with a relative pose parameterisation, which enables real-time loop closure correction in quasi-constant time.

- Rolling shutter support: Although rolling shutter cameras are cheap and widely used in real products, there is limited related research in computer vision. Existing methods for compensating rolling shutter effects are painfully slow and distort the geometry of urban scenes. We propose a novel method that leverages geometric properties of the environment (vanishing directions) to correct rolling shutter distortions in real time.

- Monocular odometry: Dense 3D reconstruction and camera pose estimation are challenging to perform in real time. We present a coarse-to-fine depth perception method that enables faster and more accurate simultaneous pose and depth estimation for frame-to-keyframe optimization.
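To illustrate what pose-graph optimization does with a loop closure, the sketch below solves a toy one-dimensional pose graph by linear least squares: odometry edges constrain consecutive poses, a loop-closure edge constrains the last pose relative to the first, and the first pose is anchored at zero. This is the standard least-squares formulation, not Toshiba's variational Bayesian method; the 1-D setting, the edge weights and the function names are simplifying assumptions.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def optimize_pose_graph_1d(n_poses, edges, anchor_weight=1e6):
    """Least-squares 1-D pose graph.

    edges: list of (i, j, measured x_j - x_i, weight).
    Minimizes sum w * (x_j - x_i - z)^2 + anchor_weight * x_0^2
    by building and solving the normal equations H x = g.
    """
    h = [[0.0] * n_poses for _ in range(n_poses)]
    g = [0.0] * n_poses
    for i, j, z, w in edges:
        # Residual r = x_j - x_i - z has Jacobian +1 on x_j and -1 on x_i.
        h[i][i] += w
        h[j][j] += w
        h[i][j] -= w
        h[j][i] -= w
        g[i] -= w * z
        g[j] += w * z
    h[0][0] += anchor_weight  # remove the gauge freedom by anchoring pose 0
    return solve_linear(h, g)


if __name__ == "__main__":
    # Odometry drifts (+1.1 per step); the loop closure says pose 3 is 3.0 from pose 0.
    edges = [(0, 1, 1.1, 1.0), (1, 2, 1.1, 1.0), (2, 3, 1.1, 1.0), (0, 3, 3.0, 1.0)]
    print(optimize_pose_graph_1d(4, edges))
```

Without the loop-closure edge the chain would end at 3.3; with it, the optimum spreads the 0.3 discrepancy over all four equally weighted edges and places the last pose at about 3.075. Real SLAM systems solve the same kind of problem over 2-D or 3-D poses with nonlinear rotations and sparse solvers.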


Ibeo Scalable Multi Sensor Localization approach for Highly Automated Driving

Ibeo Automotive Systems GmbH

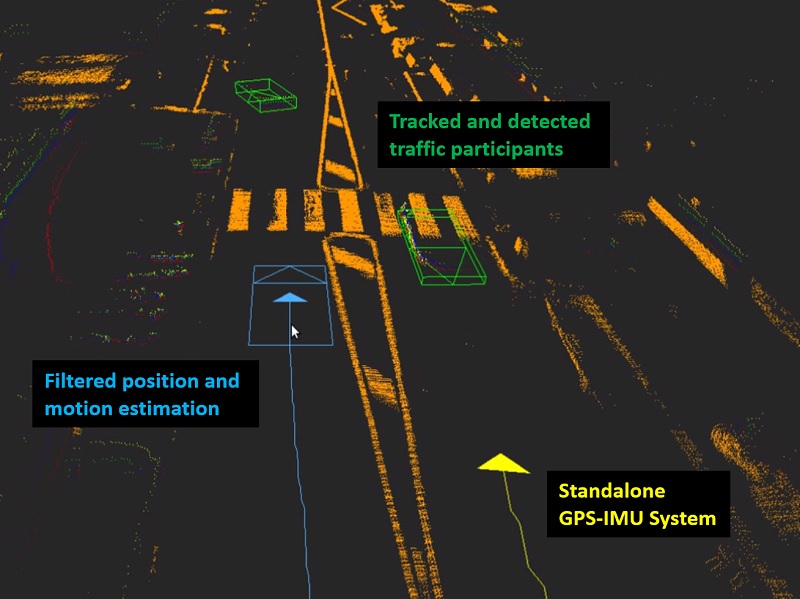

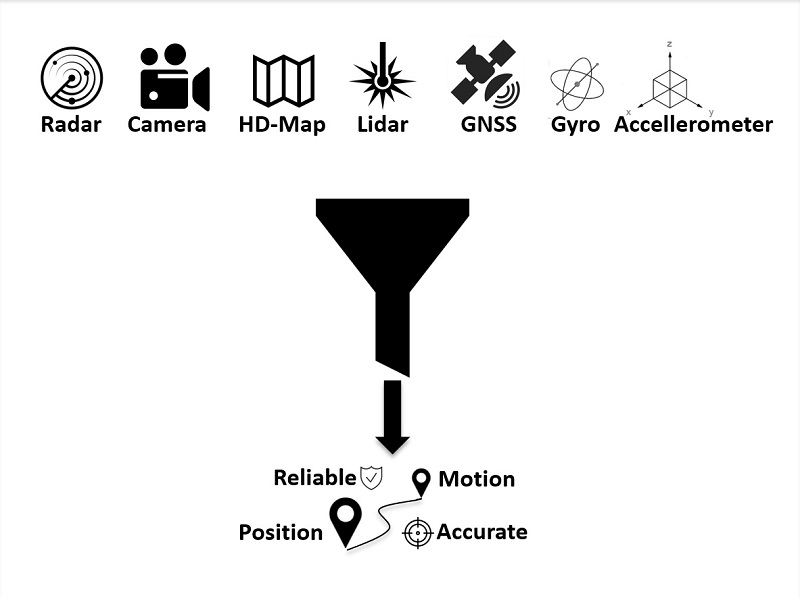

Ibeo’s main focus is on the development of safety-related applications. Therefore, the three key elements for mapping and localization are availability, reliability and integrity. Availability refers both to the signals used for localization and to the status of the localization, which must be indicated. Reliability refers to the positioning and motion estimation solutions that Ibeo offers to satisfy customers’ needs. Integrity requires that even a distorted signal be clearly flagged.

To create a map, a vehicle equipped with various sensors, such as Ibeo’s LIDAR sensors as well as other sensing sources, drives along a predefined route and records the perceived environment. The data are then processed to create a map containing not only road information such as curvature and lanes, and landmarks such as trees and sign posts, but also semantic information such as speed limits.

Once the map has been built, it can be deployed in the autonomously driving car. Various technologies are used to localize the car within this map: its position is determined from the landmarks on the map as well as from GPS positioning. Motion data of the ego vehicle are also very important. These data, such as acceleration and yaw rate, come from vehicle odometry via CAN messages, from inertial measurement units, and from LIDAR and visual odometry.
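Motion data such as speed and yaw rate are typically integrated (dead reckoning) to propagate the vehicle pose between absolute position fixes from map landmarks or GPS. The sketch below integrates a planar pose from speed and yaw-rate samples and blends in an absolute fix with a fixed gain. The midpoint integration step, the fixed `gain` (a crude stand-in for a Kalman filter update) and all names are illustrative assumptions, not Ibeo's localization method.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float = 0.0        # metres, map frame
    y: float = 0.0        # metres, map frame
    heading: float = 0.0  # radians, counter-clockwise from the x axis


def predict(pose: Pose, speed: float, yaw_rate: float, dt: float) -> Pose:
    """Dead-reckoning step from vehicle speed (m/s) and yaw rate (rad/s), e.g. from CAN."""
    # Use the mid-interval heading for a better approximation of the driven arc.
    mid = pose.heading + 0.5 * yaw_rate * dt
    return Pose(pose.x + speed * dt * math.cos(mid),
                pose.y + speed * dt * math.sin(mid),
                pose.heading + yaw_rate * dt)


def correct(pose: Pose, fix_x: float, fix_y: float, gain: float = 0.3) -> Pose:
    """Pull the predicted position toward an absolute fix (landmark or GPS); assumed fixed gain."""
    return Pose(pose.x + gain * (fix_x - pose.x),
                pose.y + gain * (fix_y - pose.y),
                pose.heading)


if __name__ == "__main__":
    pose = Pose()
    for _ in range(10):  # 1 s of straight driving at 10 m/s, sampled at 10 Hz
        pose = predict(pose, speed=10.0, yaw_rate=0.0, dt=0.1)
    pose = correct(pose, fix_x=10.5, fix_y=0.0)
    print(pose)
```

A production system would weight each source by its uncertainty (for example with an extended Kalman filter) and would also report the localization status and integrity discussed above.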


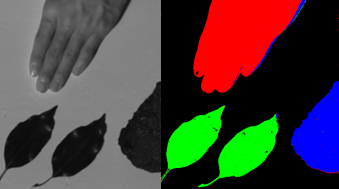

Real-time Multi-spectral Material Detection Camera System

Gifu University and ViewPLUS Inc.

We have developed a method to discriminate human skin, plant and asphalt regions based on the spectral reflectance properties of these materials in the near-infrared (NIR) wavelength range. To detect a single material in an image, only two or three wavelengths are needed: the material’s absorption wavelength and other wavelengths selected from its spectral reflectance properties. However, when discriminating more than two materials, the absorption wavelengths of each material alone are not always sufficient. We therefore discriminate the regions of three materials using multiple NIR wavelengths. In the proposed method, a binary classifier is generated for each material by Partial Least Squares (PLS) regression analysis, and the three materials are discriminated using the predicted values obtained from these binary classifiers.
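The classification scheme described above (one binary PLS regressor per material, with the material decided from the predicted values) can be sketched as follows. For brevity the sketch fits a single-component PLS1 model per material in plain Python. The number of components, the 0/1 target coding, the argmax decision rule and the toy five-band reflectance values are all assumptions for illustration, not the authors' data or settings.

```python
from typing import Dict, List


def fit_pls1_one_component(X: List[List[float]], y: List[float]):
    """Fit a one-component PLS1 regression and return a predict(x) function."""
    n, p = len(X), len(X[0])
    x_mean = [sum(row[k] for row in X) / n for k in range(p)]
    y_mean = sum(y) / n
    Xc = [[row[k] - x_mean[k] for k in range(p)] for row in X]
    yc = [v - y_mean for v in y]
    # Weight vector w is proportional to X^T y (direction of maximal covariance).
    w = [sum(Xc[i][k] * yc[i] for i in range(n)) for k in range(p)]
    norm = sum(v * v for v in w) ** 0.5
    w = [v / norm for v in w]
    t = [sum(Xc[i][k] * w[k] for k in range(p)) for i in range(n)]  # latent scores
    b = sum(t[i] * yc[i] for i in range(n)) / sum(v * v for v in t)  # inner regression

    def predict(x: List[float]) -> float:
        score = sum((x[k] - x_mean[k]) * w[k] for k in range(p))
        return y_mean + b * score

    return predict


def train_material_classifiers(samples: Dict[str, List[List[float]]]):
    """One binary PLS model per material: target 1 for that material, 0 otherwise."""
    X, labels = [], []
    for name, spectra in samples.items():
        X.extend(spectra)
        labels.extend([name] * len(spectra))
    return {
        name: fit_pls1_one_component(X, [1.0 if lab == name else 0.0 for lab in labels])
        for name in samples
    }


def classify(models, pixel: List[float]) -> str:
    """Assumed decision rule: pick the material whose model predicts the largest value."""
    return max(models, key=lambda name: models[name](pixel))


if __name__ == "__main__":
    # Hypothetical five-band NIR reflectances, two training pixels per material.
    samples = {
        "skin": [[0.82, 0.20, 0.18, 0.50, 0.52], [0.78, 0.20, 0.22, 0.50, 0.48]],
        "plant": [[0.20, 0.82, 0.18, 0.52, 0.50], [0.20, 0.78, 0.22, 0.48, 0.50]],
        "asphalt": [[0.18, 0.20, 0.82, 0.50, 0.50], [0.22, 0.20, 0.78, 0.50, 0.50]],
    }
    models = train_material_classifiers(samples)
    print(classify(models, [0.79, 0.21, 0.20, 0.50, 0.50]))  # skin
```

With real camera data one would typically use more PLS components, many more training pixels and a rejection threshold for pixels that match none of the materials.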

We have constructed a real-time material discrimination system using camera arrays, and we demonstrate identification of human skin, plants and asphalt from five-band near-infrared images.

Morpho Deep Learning System: Demonstration of Deep Learning for Visual Inspection

Morpho, Inc.

Morpho’s Deep Learning Technology

“Morpho Deep Learning System” is a machine learning system specialized for image recognition. By combining models trained with this system with the image recognition software “Morpho Deep Recognizer”, high-accuracy, high-speed image recognition can be achieved using deep learning technology and incorporated into your products and services. “Morpho Deep Learning System” comes with multiple preset network models for training image recognition, which allows image recognition software to be developed easily and quickly simply by collecting training images. Morpho also supports the development and performance evaluation of image recognition software, and offers consulting and system customization for performance improvement and optimization.

Exhibition points

We introduce image recognition technology for visual inspection based on deep learning. Our deep learning technology is capable of high-speed recognition and has a track record of commercial use.

Example scopes of Morpho’s deep learning

- Visual inspection field (small electronic parts, pharmaceuticals, building materials, etc.)

- Internet service field (general image classification etc.)

- Security field (monitoring, human recognition, etc.)

- Health care field (X-ray, specimen examination, skin/scalp diagnosis etc.)

- Classification of harmful images


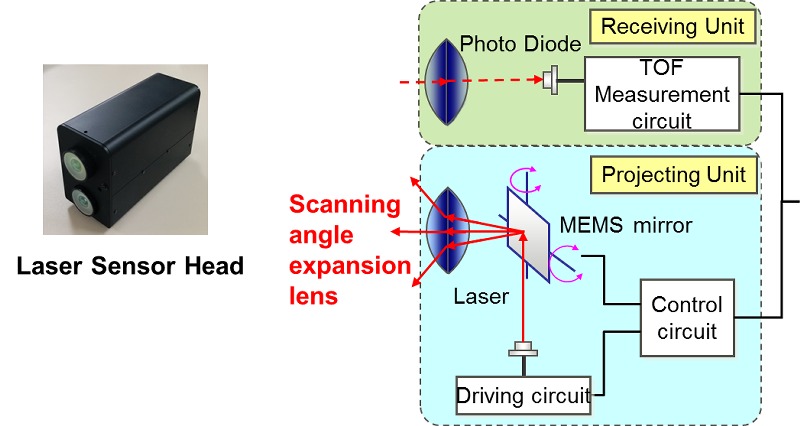

Super Wide-Angle 3D Laser Range Sensor

Fujitsu Laboratories LTD.

We have developed a completely new kind of laser sensor that measures human movements accurately. Given its wide field of view and high sensitivity, a single sensor can detect people and objects across a wide angular range.

Features of the Technology

Using a newly developed optical component that expands the scanning angle, we were able to implement a compact laser sensor capable of measuring over a broad range.

- With more than double the range of conventional laser sensors (140 degrees both horizontally and vertically), wide-range measurements can be taken with a single sensor

- Despite its wide range, it is capable of object shape measurement with a high resolution of 0.6 degrees

- Can be used at night or under direct sunlight thanks to active measurement with near-infrared light
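To give a feel for the figures above, the arithmetic below converts the stated 140-degree field of view and 0.6-degree angular resolution into the number of samples per scan line and the approximate spacing between neighbouring measurement points at a given distance. Uniform sampling at exactly 0.6 degrees, a square sampling grid and the example distances are illustrative assumptions; the sensor's actual scan pattern is not described here.

```python
import math

FOV_DEG = 140.0        # field of view, horizontal and vertical (from the text)
RESOLUTION_DEG = 0.6   # angular resolution (from the text)


def samples_per_line(fov_deg: float = FOV_DEG, res_deg: float = RESOLUTION_DEG) -> int:
    """Uniformly spaced samples covering the field of view, endpoints included (assumption)."""
    return int(fov_deg / res_deg) + 1


def point_spacing(distance_m: float, res_deg: float = RESOLUTION_DEG) -> float:
    """Approximate distance between neighbouring points at a given range (arc length)."""
    return distance_m * math.radians(res_deg)


if __name__ == "__main__":
    n = samples_per_line()
    print(n, n * n)  # samples per line, samples per frame if sampled on a square grid
    for d in (2.0, 5.0, 10.0):
        print(f"{d:4.1f} m -> {100 * point_spacing(d):.1f} cm between points")
```

Under these assumptions a scan line holds about 234 samples, and since 0.6 degrees is about 0.0105 radians, neighbouring points are roughly 1 cm apart for every metre of range.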

Applications

Sensors for detecting location and position of people (athletes, patients, customers, workers, etc.)


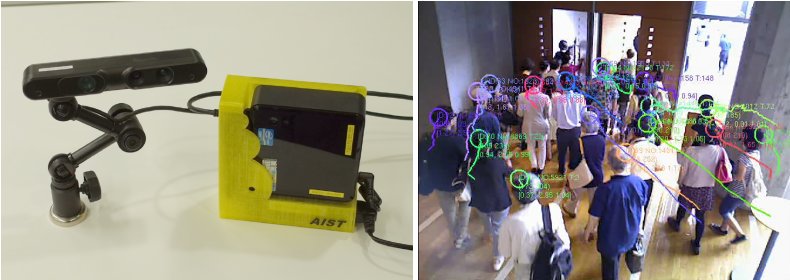

Pedestrian Tracking Using Multiple RGB-D Cameras

The National Institute of Advanced Industrial Science and Technology (AIST)

In the field of computer vision, pedestrian tracking techniques for real environments are general-purpose and can be used in various applications, including security at railroad crossings and airports, pedestrian trajectory analysis in shopping complexes, employee management in manufacturing and service industries, and detection of wandering patients in nursing homes for the elderly. In this demonstration, we show pedestrian tracking using multiple RGB-D cameras together with a technology that integrates the information multicast from each camera. Tracking results from evacuation drills in a large-scale theater and from crowded environments at a fireworks festival are presented on video.
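Integrating detections from several RGB-D cameras generally requires mapping each camera's detections into a shared floor coordinate frame and then associating them with existing tracks. The sketch below shows one simple way to do this: a 2-D rigid transform per camera (assumed to come from extrinsic calibration), merging of detections that fall within a small radius, and greedy nearest-neighbour association. The camera poses, thresholds and function names are hypothetical, and the multicast transport used in the demonstration is not modelled.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def to_world(p: Point, cam_pose: Tuple[float, float, float]) -> Point:
    """Apply a camera's assumed 2-D extrinsics (tx, ty, yaw) to a floor-plane detection."""
    tx, ty, yaw = cam_pose
    c, s = math.cos(yaw), math.sin(yaw)
    return (tx + c * p[0] - s * p[1], ty + s * p[0] + c * p[1])


def merge_detections(points: List[Point], radius: float = 0.3) -> List[Point]:
    """Merge detections of the same person seen by overlapping cameras."""
    clusters: List[List[Point]] = []
    for p in points:
        for cluster in clusters:
            cx = sum(q[0] for q in cluster) / len(cluster)
            cy = sum(q[1] for q in cluster) / len(cluster)
            if math.dist(p, (cx, cy)) < radius:
                cluster.append(p)
                break
        else:
            clusters.append([p])
    return [(sum(q[0] for q in c) / len(c), sum(q[1] for q in c) / len(c)) for c in clusters]


def associate(tracks: Dict[int, Point], detections: List[Point], gate: float = 0.8) -> Dict[int, Point]:
    """Greedy nearest-neighbour update.

    Unmatched tracks keep their last position; unmatched detections start new tracks.
    """
    pairs = sorted(
        (math.dist(tp, d), tid, di)
        for tid, tp in tracks.items()
        for di, d in enumerate(detections)
    )
    updated, used_t, used_d = dict(tracks), set(), set()
    for dist, tid, di in pairs:
        if dist > gate or tid in used_t or di in used_d:
            continue
        updated[tid] = detections[di]
        used_t.add(tid)
        used_d.add(di)
    next_id = max(tracks, default=-1) + 1
    for di, d in enumerate(detections):
        if di not in used_d:
            updated[next_id] = d
            next_id += 1
    return updated
```

For example, if camera A at the origin and camera B at (4, 0) facing the opposite direction both see a person at world position (2, 1), `merge_detections` collapses the two reports into one, and `associate` updates the nearest existing track while opening a new track for anyone seen for the first time. Practical trackers usually add motion prediction (for example a Kalman filter per track) and optimal assignment instead of the greedy matching used here.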